Trust, Interrupted: Agentic AI and the Crisis of Trust in Customer Experience

When AI makes decisions users can’t explain or contest, trust breaks. Discover how opaque automation creates emotional friction, and how to fix it.

Agentic AI and the Crisis of Explainability

It starts with a recommendation that doesn’t make sense.

It escalates when the system denies your refund with no explanation.

It breaks down entirely when “help” arrives in the form of a chatbot that cannot deviate from the script.

This is not just bad design. It’s Trust Friction: a growing phenomenon at the intersection of automation, opacity, and emotional dissonance.

When AI becomes agentic, making autonomous decisions without user input or clear rationale, it doesn’t just save time. It reshapes the emotional architecture of trust in digital systems.

And we’re paying the price for that silence.

What Is Agentic AI?

Agentic AI is more than a tool. It’s an actor. It makes choices, enforces rules, and takes action on behalf of the system that deployed it.

It routes your support ticket.

It flags your account.

It decides what options you’re allowed to see.

And crucially, it does all of this without needing to explain itself.

The problem isn’t that it’s wrong.

The problem is that you have no idea why it did what it did.

And no way to push back.

Introducing: Trust Friction

Trust Friction is the emotional tension created when a user experiences an outcome they can’t understand, question, or reverse.

It feels like:

“Why did it do that?”

“What am I supposed to do now?”

“Who decided this?”

“Is there even a human behind this?”

Even when the system is technically “correct,” the absence of explainability triggers suspicion, anxiety, and disengagement.

The result?

Higher abandonment rates.

Increased support demand.

Escalated frustration at every interaction layer.

In short: you didn’t just lose the sale. You lost the relationship.

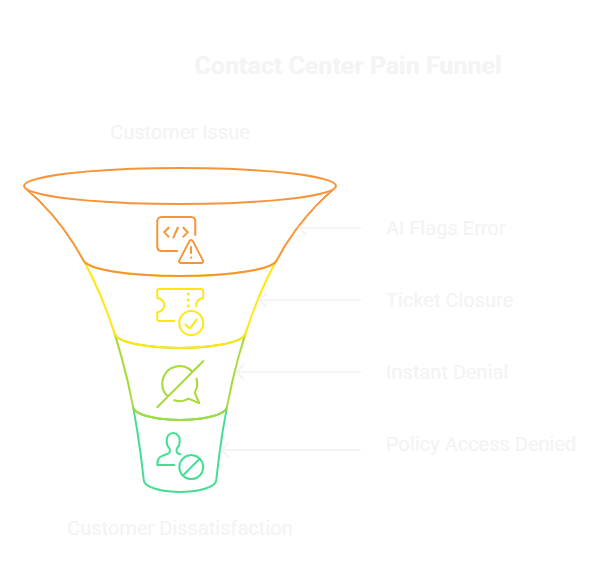

The Contact Center Pain Funnel

Nowhere is Trust Friction more obvious than in support environments, where resolution is expected and emotions are already running high.

Most Common Flashpoints:

Billing disputes: The AI flags something as “user error,” offers no context, and closes the ticket.

Claims processing: A denial arrives instantly, without evidence or timeline, just a final decision.

Moderation appeals: A user is banned or flagged without access to the policy or reviewer.

And when the user tries to escalate?

They’re met with another AI: looping, vague, unhelpful.

Or worse, a human constrained by the AI’s decision tree.

This isn’t efficiency. It’s institutional gaslighting by automation.

Why Opaque AI Creates Structural Distrust

When people can’t understand the decision, they assume the worst:

It’s biased.

It’s rigged.

It’s broken.

It’s intentionally unaccountable.

This perception metastasizes. One bad AI interaction infects every future touchpoint. Even when the next system is helpful, users now approach it defensively.

Trust, once broken by a black box, doesn’t regenerate. It recedes.

What Explainability Actually Requires

To reduce Trust Friction, we must build explainability layers into agentic systems, especially in customer-facing roles.

That means:

Human-readable logic: Why this, why now, based on what?

Contestation pathways: Can the user appeal, correct, or override?

Historical visibility: What was seen, flagged, or triggered?

Clear authorship: Was this human-made, model-made, or hybrid?

This doesn’t just build trust; it safeguards dignity.
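As a rough illustration, here is a minimal Python sketch of what one such explainability layer could look like: a record attached to every automated decision. It is not drawn from any particular product. Every name in it (`DecisionRecord`, `Authorship`, `Appeal`, `explain`, `contest`) is hypothetical, and the refund scenario is invented for the example.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Authorship(Enum):
    """Clear authorship: who actually made the call (hypothetical categories)."""
    HUMAN = "human"
    MODEL = "model"
    HYBRID = "hybrid"


@dataclass
class Appeal:
    """A user's challenge to a decision. The status values are assumptions."""
    reason: str
    status: str = "open"


@dataclass
class DecisionRecord:
    outcome: str             # what the system decided
    rationale: str           # human-readable logic: why this, why now
    triggers: List[str]      # historical visibility: what was seen or flagged
    authorship: Authorship   # human-made, model-made, or hybrid
    contestable: bool = True
    appeals: List[Appeal] = field(default_factory=list)

    def explain(self) -> str:
        """Render the decision in plain language for the affected user."""
        lines = [
            f"Decision: {self.outcome}",
            f"Why: {self.rationale}",
            "Based on: " + "; ".join(self.triggers),
            f"Decided by: {self.authorship.value}",
            "You can appeal this decision."
            if self.contestable
            else "This decision cannot be appealed.",
        ]
        return "\n".join(lines)

    def contest(self, reason: str) -> Appeal:
        """Contestation pathway: log an appeal instead of closing the loop."""
        if not self.contestable:
            raise PermissionError("This decision has no contestation pathway.")
        appeal = Appeal(reason=reason)
        self.appeals.append(appeal)
        return appeal


# Invented example: a refund denial the user can actually read and challenge.
record = DecisionRecord(
    outcome="Refund denied",
    rationale="The 30-day return window had passed.",
    triggers=["Order placed 45 days ago", "Return policy: 30 days"],
    authorship=Authorship.MODEL,
)
print(record.explain())
record.contest("The item arrived three weeks late.")
```

The point of the sketch is structural: the rationale, the triggers, the author, and the appeal path travel with the decision itself, so neither the user nor the frontline agent has to reverse-engineer what happened.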

Call It What It Is: Trust Isn’t a Byproduct. It’s a Feature.

We’ve built AI to optimize for speed, scale, and personalization.

But we’ve failed to optimize for interpretability, the foundation of relational trust.

And now, as systems make more decisions with less context, we’re watching the trust curve collapse under its own weight.

Trust Friction isn’t a UX bug. It’s a systemic design failure.

And until we address it, no model, no matter how powerful, will be believable, let alone trusted.

Coming Next:

“The Contact Center as the Last Trust Bastion”: why humans still matter, and how frontline agents are becoming trust repair techs in the age of automation.