Part V: Beyond the Algorithm, What Needs to Change in Medicine for AI to Actually Help

AI can’t fix healthcare if the system stays broken. We need cultural, institutional, and human change to make tech truly trustable and finally equitable.

By now, the promises and perils of AI in healthcare are clear.

We’ve seen how biased data can reinforce misdiagnosis.

We’ve seen how opaque systems can erase patient voice.

We’ve seen that models often miss exactly what the medical system has always missed, because they were trained on its blind spots.

But this series isn’t about giving up.

It’s about looking deeper.

Because the question was never just “Can AI help?”

It’s “What kind of healthcare system are we building, and for whom?”

The System Is Still Sick

Artificial intelligence is only as ethical as the system it serves.

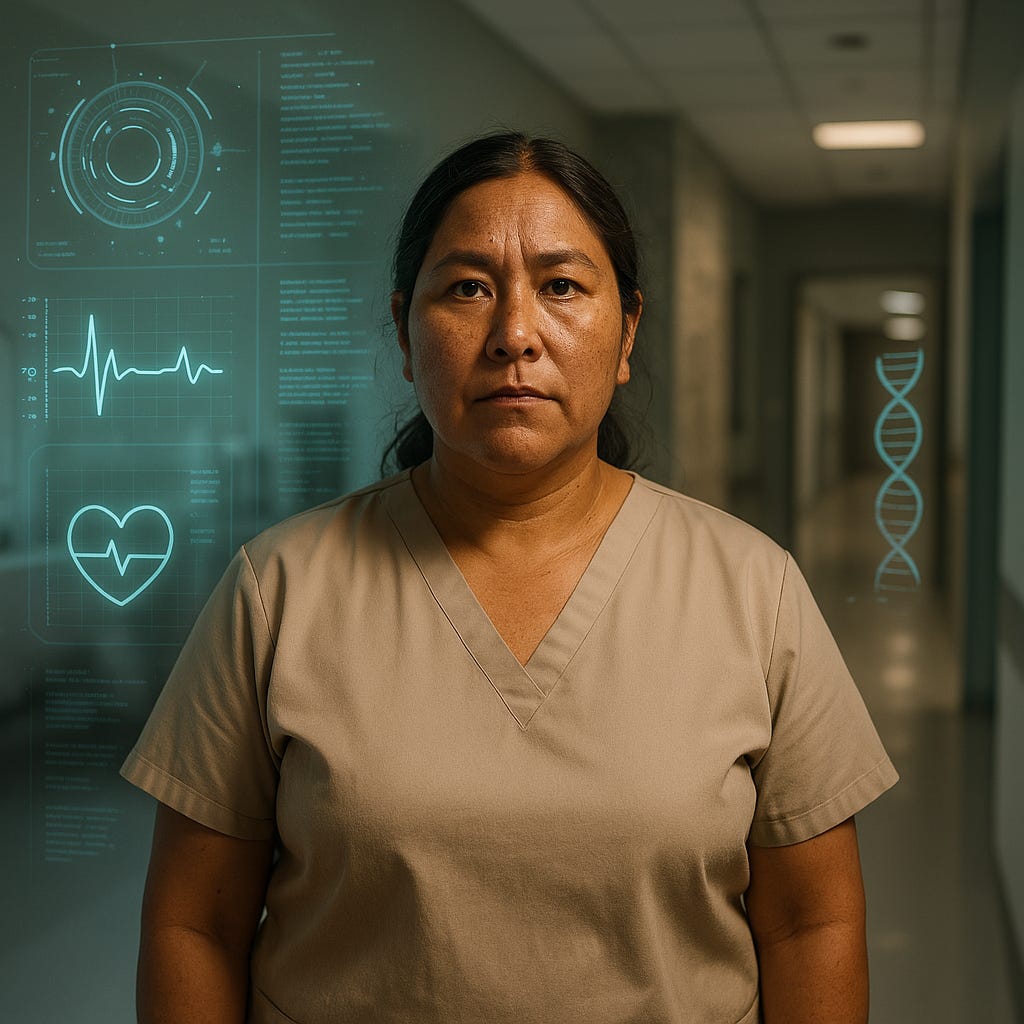

In a healthcare infrastructure built on unequal access, structural racism, underfunded public health, and a long legacy of patient dismissal, especially for women, people of color, disabled people, and LGBTQ+ communities, tech won’t save us.

Not on its own.

Let’s be honest about the state of things:

In the U.S., some studies tie over 60% of personal bankruptcies to medical costs.

Black women are three times more likely to die from pregnancy-related causes than white women.

Women with chronic illnesses still face years-long diagnostic delays, especially for conditions like endometriosis, autoimmune disorders, or neurodivergence.

Trans patients face both provider ignorance and algorithmic erasure; most models aren’t even trained on gender-diverse data.

These aren’t bugs. They’re features of a system built around the “ideal patient”: white, male, cis, compliant.

So, if AI is dropped into this system without reform, it won’t transform care.

It’ll automate injustice with a cleaner interface.

What AI Can Do

But here’s where it gets more hopeful:

AI isn’t inherently biased. It’s intrinsically reflective.

That means it can reflect something better if we dare to build it.

AI can:

Surface patterns missed by overworked clinicians

Flag diagnostic gaps across demographics

Expand access in rural, underserved areas

Support patients in managing chronic conditions

Provide second-opinion models that help challenge bias at the point of care

And when paired with transparent design, equitable datasets, and human-centered oversight, it can do more than optimize. It can listen.

That’s where the opportunity lives.

But for it to matter, we need a healthcare system willing to change alongside it.

What Needs to Change

Here’s the uncomfortable truth: bias in AI is a symptom.

To treat the disease, we need structural transformation in:

1. Medical Education

Teach future clinicians to recognize gender and racial bias not just as “social issues” but as clinical risks.

Integrate algorithmic literacy into medical training. Clinicians must understand how AI works to use it responsibly.

2. Clinical Research

Require diverse representation in trials, including gender-diverse and neurodiverse populations.

Disaggregate data by race, sex, gender identity, and disability to catch early signs of disparity.
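What does disaggregation look like in practice? Here is a minimal sketch in Python, not a definitive method. The records are synthetic and made up for illustration, and the field names (`sex`, `race`, `delay_months`) and the helper `median_by_group` are assumptions, not taken from any real dataset or library.

```python
from collections import defaultdict
from statistics import median

# Synthetic, made-up records for illustration only (not real patient data).
# The field names are assumptions chosen for this sketch.
RECORDS = [
    {"sex": "F", "race": "Black", "delay_months": 30},
    {"sex": "F", "race": "Black", "delay_months": 42},
    {"sex": "F", "race": "white", "delay_months": 20},
    {"sex": "F", "race": "white", "delay_months": 28},
    {"sex": "M", "race": "Black", "delay_months": 14},
    {"sex": "M", "race": "Black", "delay_months": 18},
    {"sex": "M", "race": "white", "delay_months": 10},
    {"sex": "M", "race": "white", "delay_months": 12},
]


def median_by_group(records, keys, value_field):
    """Group records by the given keys and return the median value per group."""
    groups = defaultdict(list)
    for record in records:
        group = tuple(record[k] for k in keys)
        groups[group].append(record[value_field])
    return {group: median(values) for group, values in groups.items()}


# One pooled number for everyone.
overall = median(r["delay_months"] for r in RECORDS)

# The same data, split by one attribute and then by two.
by_sex = median_by_group(RECORDS, ["sex"], "delay_months")
by_sex_and_race = median_by_group(RECORDS, ["sex", "race"], "delay_months")
```

In this toy data the pooled median looks unremarkable. The gap only shows up once the numbers are split by sex, and it widens again when race is added. That is the point of disaggregating: the average can hide exactly the people the system is failing.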

3. Health Policy

Enforce transparency and bias auditing in medical AI systems.

Invest in open-access, inclusive health data initiatives that empower underrepresented communities.
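A bias audit can start simpler than it sounds. The sketch below is one possible check, not a regulatory standard: it compares how often a model misses true cases in each group. The rows are synthetic, the group labels are placeholders, and the `max_gap` threshold of 0.1 is an arbitrary assumption picked for illustration.

```python
from collections import defaultdict

# Synthetic audit rows for illustration: (group, has_condition, model_flagged).
AUDIT_ROWS = [
    ("group_a", True, True),
    ("group_a", True, True),
    ("group_a", True, True),
    ("group_a", True, False),
    ("group_a", False, False),
    ("group_b", True, True),
    ("group_b", True, False),
    ("group_b", True, False),
    ("group_b", True, False),
    ("group_b", False, False),
]


def false_negative_rates(rows):
    """Return, per group, the share of true cases the model failed to flag."""
    positives = defaultdict(int)
    missed = defaultdict(int)
    for group, has_condition, model_flagged in rows:
        if has_condition:
            positives[group] += 1
            if not model_flagged:
                missed[group] += 1
    return {group: missed[group] / positives[group] for group in positives}


def audit(rows, max_gap=0.1):
    """Compare miss rates across groups; max_gap is a hypothetical threshold."""
    rates = false_negative_rates(rows)
    gap = max(rates.values()) - min(rates.values())
    return {"rates": rates, "gap": gap, "passes": gap <= max_gap}


report = audit(AUDIT_ROWS)
```

Here the model misses one in four true cases in one group and three in four in the other, so the audit fails. A real audit would need far more data, more than one metric, and people from the affected communities deciding what counts as an acceptable gap.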

4. Patient Power

Enshrine the right to understand, question, and override AI-driven decisions.

Fund and include patient advocacy groups in the development and evaluation of clinical tech tools.

5. Tech Industry Culture

Move beyond “move fast and break things.”

Redefine success metrics to include equity, not just efficiency or market penetration.

The tools are evolving fast, but if the culture doesn’t evolve with them, we’ll just build faster pathways to the same old outcomes.

A Quick Recap of the Series

Part I: The Diagnosis Delay — Unpacked the long history of women being dismissed, misdiagnosed, and minimized in medicine—and the potential of AI to bypass those failures.

Part II: Garbage In, Garbage Out — Exposed how training AI on biased health data risks scaling injustice, especially around race, gender, and chronic illness.

Part III: Can We Build a Better Machine? — Showcased emerging research in equitable model design, bias audits, and intersectional AI.

Part IV: AI You Can Argue With — Made the case for transparency, explainability, and the human right to question machine-driven decisions.

Toward a More Trustable Future

Technology won’t rebuild trust on its own.

But people can.

Healthcare trust isn’t just about outcomes. It’s about the process. It’s about whether people feel seen, heard, and respected at every step of their care.

So yes, build the AI. But don’t forget the clinic, the waiting room, the bedside, the lived experience, and the voices that were never in the room when the systems were first built.

Because the transformation won’t come from silicon alone.

It will come from the courage to change how we define care.

It will come from re-centering the people medicine forgot.

The tech is promising. But the revolution will be human.